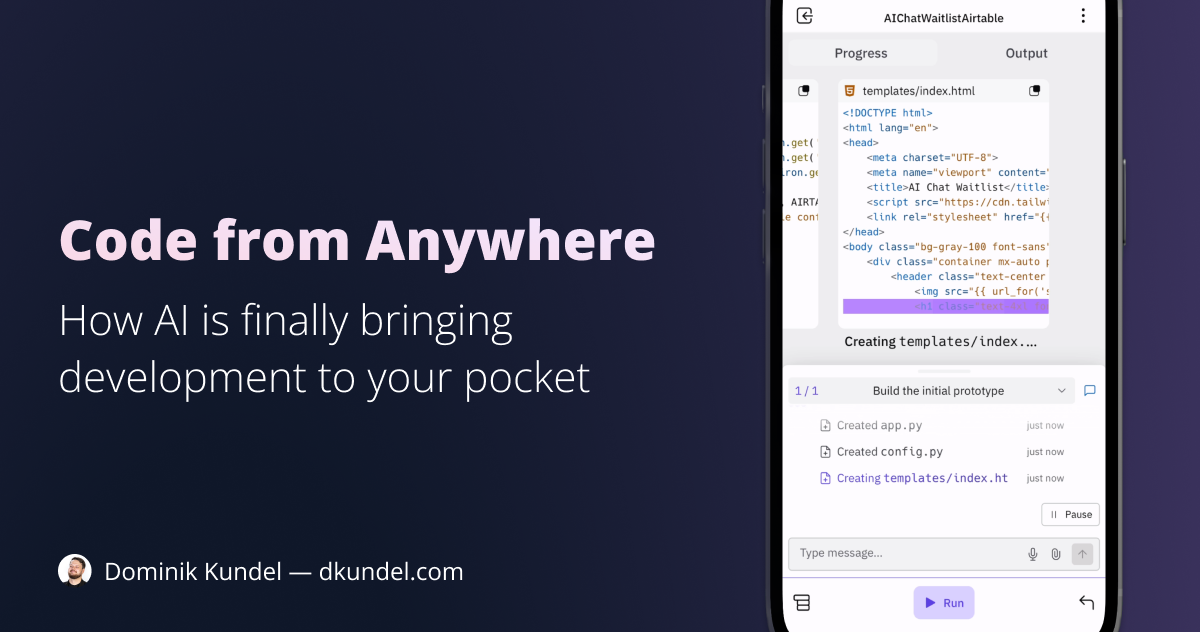

Code from Anywhere: How AI is Finally Bringing Development to Your Pocket

Recently I was sitting in a waiting room with an expected eight-hour wait. While scrolling through social media on my phone, I suddenly had an idea for a project, but my laptop was nowhere near. I could have done what I (and probably you) have done before when an idea strikes while I’m out and about: open my notes app and write it down, only to have it get buried and never seen again.

Instead, I decided to try out the new Replit Agent, which had launched on mobile just a few days earlier. I gave it a prompt, it devised a plan, and then started executing it by installing dependencies, creating checkpoints, asking me to verify steps, and ultimately offering to deploy it. While far from perfect, it was a magical experience that made me think that, thanks to AI, we might be closer than ever to a world where anyone can code from anywhere, whether it’s at home on your laptop or in a waiting room on your phone. In this blog post, we’ll go through the history of development on mobile, how we got here, and how I believe we might finally get there with the help of AI.

History

The idea of being able to build and write code from anywhere using your smartphone or tablet is probably as old as these devices themselves. Every once in a while a new app takes a stab at bringing development to mobile devices, but nothing has ever truly made it. In October 2022, Replit launched a mobile app. A few months before that, CodeSandbox launched a mobile app after having acquired Play.js the year before. And even prior to that, GitHub launched a mobile app, but focused it more on code browsing and doing PR reviews on the go.

Overall, the mobile app space for developers still seems to be in its infancy. Yet in a recent StackOverflow survey, 8.4% and 7.3% of developers said they used Android or iOS, respectively, as their primary operating system for professional work 1. Meanwhile, mobile phones held a global market share of 62% vs. 35% for desktops in August 2024 2.

While we haven’t seen a shift to more development on mobile, there is currently a clear transformation in how we write code. According to the 2024 StackOverflow survey, 62% of developers are already using AI in their development workflow 3. Some call this new paradigm Chat-oriented Programming (CHOP). It’s beginning to change not just how current developers write code but also how new people can learn to build. My friend Ricky’s 8-year-old daughter recently went viral for building an app from scratch using the AI-powered editor Cursor.

What can an 8-year-old build in 45 minutes with the assistance of AI?

— Ricky (@rickyrobinett) August 19, 2024

My daughter has been learning to code with @cursor_ai and it’s mind-blowing🤯

Here are highlights from her second coding session. In 45 minutes she built a chatbot powered by @CloudflareDev Workers AI 👀 pic.twitter.com/MJ6vAlmvnj

The problem with coding on mobile devices

To understand why AI might change what devices we use to write code, we first need to understand the challenges of writing code on mobile devices. I’ve long been interested in this problem. In fact, back in 2014 my Google Summer of Code proposal was to design a mobile-first environment for the educational coding app CodeCombat.

In general the two biggest problems with developing on mobile devices are:

Code is incredibly verbose

If you’ve ever tried to write a long text on your phone you probably already struggled a fair amount using the small keyboard as it’s often slower and results in a lot of typos. Writing code on a mobile device is even worse. Most of the common symbols that you need for writing code (like { or } or ;) are hidden behind additional layers of your keyboard.

On top of that, most programming languages are not really “space-conscious” when it comes to screen real estate. They tend to use a lot of whitespace and newlines, which makes code more readable in general but not necessarily on small screens.

Some mobile development apps have tried to tackle this by using special keyboards or creative controls. Replit, for example, introduced features like their “coding joystick” that was aimed at writing and navigating code.

Writing code is just part of the equation

Building an app is also not limited to writing code and running it. It often involves leveraging additional tools like running a database, running a local dev server, installing additional dependencies/services and more. On your computer this typically results in multiple open terminal sessions, several windows and sometimes even multiple monitors. On the other hand just switching between multiple apps on a mobile device like an iPhone can become cumbersome, let alone having the different apps talk to each other.

As a result, creating development environments for mobile is not just a matter of porting a code editor to iOS and Android but of going back to first-principles thinking and building a new development environment from the ground up.

Cloud development environments walked so AI could run

Even older than the idea of developing on your smartphone is the idea of “cloud development environments” (CDEs), which have gone through various levels of sophistication over the last 15+ years. Early offerings like PHPanywhere in 2009 (essentially a web-based FTP client that would later become Codeanywhere) were followed by web dev tools like CodePen and JSFiddle, and eventually by full instances and forks of Visual Studio Code running in the browser and connected to a remote server, including GitHub Codespaces, CodeSandbox and Project IDX. Along the way, the level of sophistication of these tools has increased dramatically.

At the same time though, the reality is that while some of these tools gained traction, especially for developer education purposes such as sample applications or to make contributing to open source easier, they haven’t really taken off as mainstream development tools. The reasons for this are likely multifaceted, but we’ll cover some of them in a later section.

The fact is that without the concept of CDEs, development on mobile devices would still be incredibly impractical. A lot of the challenges that CDEs had to solve are the same that made a full development flow on mobile devices hard: running multiple different processes & tools at the same time, handling multi-tasking while being restricted to a single window, exposing a development server that the user can access, dealing with hardware & software limitations on the device the developer is accessing the CDE from, etc.

At the same time, CDEs on their own don’t solve the mobile development problems, as David East, Lead DevRel of Project IDX, shows in a post.

What’s stopping you from coding like this? pic.twitter.com/vujhxHrZnB

— David East (@_davideast) September 27, 2024

How AI Agents are changing the development game

With an overall “integrated” development flow seemingly being a hard but solved problem, the remaining challenge comes down to the user experience of writing and navigating code. Enter: Generative AI.

If you are a developer, chances are high that you’ve had an LLM (Large Language Model) like OpenAI’s GPT-4o or Anthropic’s Claude 3.5 Sonnet write code for you at least once in the last three years. In fact, according to the StackOverflow survey from 2024, 82% of developers who used AI tools used them to write code, even if only ~42% trust the AI code 4.

With LLMs’ continuous advancements in their coding abilities, syntax will no longer be a barrier to writing code, enabling both engineers and non-engineers to focus on semantics and the problems at hand instead.

The same goes for navigating code. With the help of LLMs, developers can now ask questions like “what does this function do” or “what does this variable do” and get an answer back. This is especially useful for developers who are not familiar with the codebase. It also reduces the need to read large amounts of code to understand the relationships and structure of your codebase.

Where these capabilities get supercharged though is when we introduce the concept of AI Agents. AI Agents are systems that can not just generate text/code but actually plan and perform tasks. In the case of developing software these tasks can include things like:

- Editing code

- Running command-line tools to install dependencies, run tests, etc.

- Deploying code to a server

- Searching the web for additional documentation

- etc.
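To make the “plan and perform tasks” idea a bit more concrete, here is a minimal sketch of what such an agent loop could look like. It is not how Devin, Copilot Workspace or Replit Agent actually work. The `plan` function is a hypothetical stand-in for an LLM call and simply returns a fixed list of steps, and the tools (`edit_code`, `run_command`, `deploy`) only simulate their effects instead of touching a real shell or server. All names, including the `example.invalid` target, are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    tool: str      # name of the tool the agent wants to call
    argument: str  # a single string argument, kept simple for illustration


@dataclass
class Workspace:
    files: dict[str, str] = field(default_factory=dict)  # path -> contents
    log: list[str] = field(default_factory=list)         # one entry per executed step


def edit_code(ws: Workspace, argument: str) -> str:
    # The argument has the form "path=contents".
    path, _, contents = argument.partition("=")
    ws.files[path] = contents
    return f"wrote {path}"


def run_command(ws: Workspace, argument: str) -> str:
    # Simulated: a real agent would run this inside a cloud development environment.
    return f"ran `{argument}` (simulated)"


def deploy(ws: Workspace, argument: str) -> str:
    # Simulated: nothing is uploaded anywhere.
    return f"deployed {len(ws.files)} file(s) to {argument} (simulated)"


TOOLS: dict[str, Callable[[Workspace, str], str]] = {
    "edit_code": edit_code,
    "run_command": run_command,
    "deploy": deploy,
}


def plan(prompt: str) -> list[Step]:
    # Hypothetical stand-in for the LLM: a real agent would derive the plan from the prompt.
    return [
        Step("run_command", "pip install flask"),
        Step("edit_code", "app.py=print('hello')"),
        Step("run_command", "pytest"),
        Step("deploy", "example.invalid"),
    ]


def run_agent(prompt: str) -> Workspace:
    ws = Workspace()
    for step in plan(prompt):
        tool = TOOLS.get(step.tool)
        if tool is None:
            ws.log.append(f"unknown tool: {step.tool}")
            continue
        ws.log.append(tool(ws, step.argument))
    return ws


if __name__ == "__main__":
    for line in run_agent("build a hello world web app").log:
        print(line)
```

The point of the sketch is the shape of the loop: the model decides which tool to call next, and the surrounding system executes it and records the result. On a phone, that means the typing-heavy parts happen on the agent’s side rather than on a tiny keyboard.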

Some promising early versions of programming-specific AI Agents are Cognition’s Devin, GitHub Copilot Workspace and Replit Agent.

Development coming to the phone near you

With these two technology changes, CDEs and AI Agents that can write and edit code as well as execute other commands, the foundation of mobile development flows is in place, and we are beginning to see the fruits of this labor.

Anthropic’s Claude added support for their “artifacts” feature to their mobile app that allows for Claude not just to write code but also render the code for you. Unfortunately that capability is limited to front-end code but it can still be incredibly powerful for you to visualize your idea on the go and iterate on it before you come back home to implement it.

When GitHub Copilot Workspace was announced in April 2024, GitHub announced support for it in the GitHub mobile app at the same time.

Replit’s mobile app takes this all one step further though by essentially letting you perform the same tasks you would complete on a desktop, just in a smaller form factor. It can devise a plan, install dependencies, run both front-end and back-end code, create checkpoints along the way (probably more often than you remember to run git commit), even handle environment variables for you and ultimately deploy the app.

The potential for an exciting future

Whether or not the current contenders are going to be the ones to win the race, I think the future of writing code is incredibly exciting. For entire generations and communities, smartphones have long since overtaken computers and laptops as the gateway to the world. Writing code on mobile devices could stop being just a gimmick: in combination with AI, it could enable people to build who were previously held back not by a lack of ideas but by a lack of access and coding ability.

For the concept of coding from anywhere to become a reality for professional and widespread use though, we still have a ways to go and questions to answer. Apart from continuing to improve Generative AI models’ ability to write code, these tools require a more integrated approach to development, so they need to:

- provide a seamless experience across mobile and desktop

- integrate with the vast ecosystem of tools that developers already use

- pass the rigorous security and privacy standards of the companies whose developers will be using them

- continue to iterate on the user experience of mobile editing

- be accessible to developers both at work and at home, because developers tend to bring their personal tools to work

While the technology is still not quite ready for every use case, we are getting closer than ever to a world where we can have an idea on the go and immediately begin to turn it into reality, no matter where we are, using just text or voice and our phone.

So the next time an idea strikes, give a mobile AI code editor, like those mentioned above, a try and build right from your phone. Hit me up afterwards; I’d love to hear what you built!

Thank you Greg Bauges, Ricky Robinett and Craig Dennis for reviewing early versions of this article.